Digital Accessibility: Apple Presents New Features for 2025

May 15 is Global Accessibility Awareness Day, an international day held every year in May to raise awareness of digital accessibility. Apple started the division dedicated to developing these solutions 40 years ago, and its work has followed the evolution of technology and devices. Milestones include VoiceOver on iPhone in 2009; "Made for iPhone" (MFi) hearing aids, that is, hearing aids compatible with iPhone, in 2014; and, this year, Accessibility Reader, a mode that makes reading easier for users with visual or cognitive disabilities.

For 2025, Apple has announced a series of new features coming later this year, starting with Accessibility Nutrition Labels on the App Store, followed by Magnifier for Mac, Braille Access, Accessibility Reader, and more. Throughout May, select Apple Stores will have dedicated tables highlighting the accessibility features of various devices. These add to the Today at Apple sessions on accessibility held throughout the year.

“Accessibility is part of Apple’s DNA,” said Tim Cook, Apple’s CEO. “Creating technology that works for all people is a priority for us, and we’re excited about the innovations we’re delivering this year. We’re offering tools to help people access critical information, explore the world around them, and do the things they love.”

“At Apple, we have 40 years of accessibility innovation, and we’re committed to continuing to push the envelope by introducing new accessibility features across all of our products,” said Sarah Herrlinger, Apple’s senior director of Global Accessibility Policy and Initiatives. “Using the power of the Apple ecosystem, these features work together seamlessly to give people new ways to interact with the things they care about.”

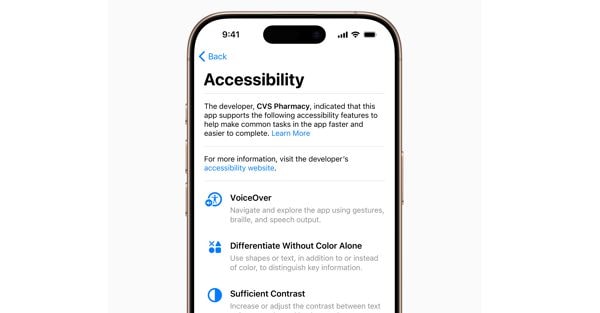

Accessibility Nutrition Labels add a new section to App Store product pages that highlights accessibility features within apps and games. The labels give users a new way to find out whether an app is accessible before downloading it, and give developers the opportunity to better inform people about the features their app supports. These include VoiceOver, Voice Control, Larger Text, Sufficient Contrast, Reduce Motion, Captions, and more.

Accessibility Nutrition Labels for apps

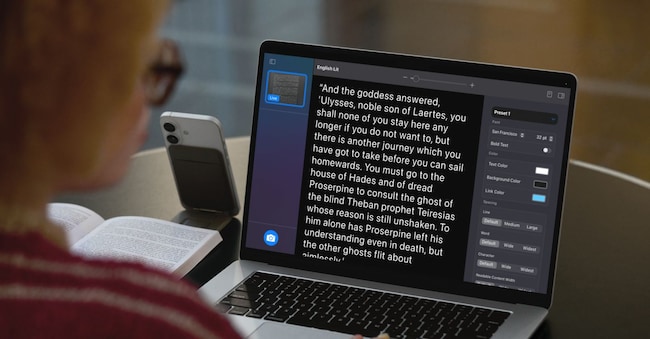

Since 2016, the Magnifier app on iPhone and iPad has given people who are blind or have low vision tools to zoom in, read text, and detect objects around them. This year, Magnifier comes to Mac to make the physical world more accessible to people with low vision. Magnifier for Mac connects to a camera, letting users zoom in on their surroundings, such as a screen or whiteboard. It works with Continuity Camera on iPhone as well as connected USB cameras, and supports document reading using Desk View.

With multiple live session windows, users can multitask, for example viewing a presentation through a webcam while following along in a book using Desk View. With custom views, users can adjust brightness, contrast, color filters, and even perspective to make text and images easier to see. They can also capture, group, and save views to return to later. Magnifier for Mac also integrates another new accessibility feature, Accessibility Reader, which transforms real-world text into a custom, easier-to-read format.

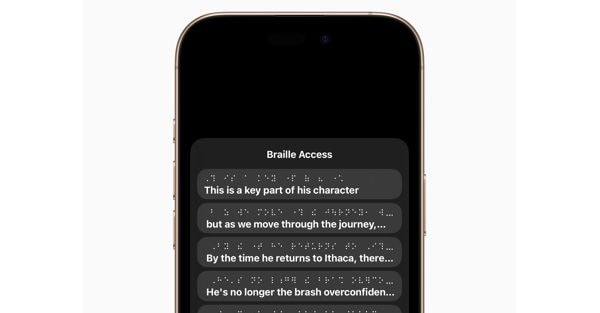

Braille reading

Braille Access turns iPhone, iPad, Mac, and Apple Vision Pro into full-featured braille note takers. Braille is the tactile reading and writing system used primarily by people who are blind or have low vision. With a built-in app launcher, users can open any app by typing with Braille Screen Input or a connected braille device. With Braille Access, users can quickly take notes in braille and perform calculations using Nemeth Braille, a code often used in science and math classrooms. Users can open Braille Ready Format (BRF) files directly from Braille Access, unlocking a wide range of books and files previously created on braille note-taking devices. And with a built-in form of Live Captions, users can transcribe conversations in real time directly on braille displays.

Accessibility Reader is a new system-wide reading mode designed to make text easier to read for people with a wide range of disabilities, such as dyslexia or low vision. Available on iPhone, iPad, Mac, and Apple Vision Pro, Accessibility Reader gives people new ways to customize text and focus on the content they want to read, with expanded options for font, color, and spacing, as well as support for Spoken Content. Accessibility Reader can be launched from any app and is built into the Magnifier app on iOS, iPadOS, and macOS, so users can also interact with text in the physical world, such as books or restaurant menus.

Designed for people who are deaf or hard of hearing, Live Listen controls come to Apple Watch with a new set of features, including real-time Live Captions. Live Listen turns iPhone into a remote microphone that streams audio directly to AirPods, Made for iPhone hearing aids, or Beats headphones. While a session is active on iPhone, users can view Live Captions of what their iPhone hears on a paired Apple Watch as they listen to the audio. Apple Watch serves as a remote control to start or stop Live Listen sessions, or to jump back in a session to catch anything they may have missed. With Apple Watch, users can control Live Listen sessions from across the room, without having to get up in the middle of a meeting or lecture.

For people who are blind or have low vision, visionOS will expand its vision accessibility features using the advanced camera system on Apple Vision Pro. With powerful updates to Zoom, users will be able to magnify everything in view, including their surroundings, using the main camera. For VoiceOver users, Live Recognition in visionOS uses on-device machine learning to describe their surroundings, find objects, read documents, and more.
